Humanitas XAI: Certifiable & Explainable AI — From Concept to Operation

01

Certification-first design

Built to align with DO-178C/DO-330 and modern AI governance frameworks (ISO/IEC 42001, NIST AI RMF, IEEE 7001/7003), our pipeline integrates compliance from the ground up, not as an afterthought.

02

Explainable by design

Every AI decision is directly linked to its datasets, validated test cases, and documented performance metrics. No black boxes—just full traceability and accountability.

03

Proven multi-layer platform

We unify simulation, digital twins, swarm coordination, and telecom/edge orchestration in a single workflow, delivering real-world resilience and performance.

04

Market Traction

Early collaborations with aerospace research centers and deployments in telecom and swarm simulations confirm the platform’s readiness for mission-critical adoption.

Why Humanitas XAI?

In aerospace, defense, telecom, and other high-stakes industries, “black-box” AI won’t fly. Trust is everything: every decision must be explainable, certifiable, and audit-ready.

That’s exactly what Humanitas XAI delivers: a full AI pipeline, from mission definition to deployment and continuous monitoring, built to meet the toughest certification standards while ensuring transparency at every step.

Our Edge

01

Phase 1 | Design: Build Trust from the Start

- Clear mission definition: Objectives, environment, and constraints are formalized up front to eliminate ambiguity.

- Simulation-first approach: Digital twins and advanced simulators test drones, networks, and even cyberattacks virtually, before any real-world deployment.

- Rich, certified datasets: Real-world sensor and telecom data are combined with synthetic datasets to ensure full coverage, fairness, and auditability.

Result: A foundation of requirements and validated datasets that are audit-ready and certifiable.

02

Phase 2 | Build & Test: Integrate Proof into Development

- Advanced model development: Transfer learning, federated learning, and reinforcement learning, optimized for edge deployment with FPGA acceleration, pruning, and quantization.

- Rigorous verification: Stress-tested against edge cases, noise, and adversarial attacks to guarantee robustness.

- Explainability by design: Interpretability built into the model architecture, verified by human experts.

- Automatic evidence generation: The pipeline produces assurance cases, safety arguments, and traceability matrices aligned with DO-178C and ISO/IEC standards.

Result: High-performance models that are not just powerful, but transparent, certifiable, and regulator-ready.
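As an illustration of the pruning and quantization step mentioned in Phase 2, here is a minimal Python sketch, assuming magnitude-based pruning followed by symmetric per-tensor int8 quantization. The function names (`prune_by_magnitude`, `quantize_int8`, `dequantize`) are hypothetical and do not describe the Humanitas XAI implementation; a production flow would typically rely on a framework's own tooling.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the smallest-magnitude fraction of weights (hypothetical helper)."""
    k = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to the int8 range [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero tensor, where any scale would work.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 values back to approximate floats."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.01, 0.9]
pruned = prune_by_magnitude(weights, sparsity=0.25)
q, scale = quantize_int8(pruned)
restored = dequantize(q, scale)
# Worst-case reconstruction error, which is evidence worth logging for audit.
max_error = max(abs(a - b) for a, b in zip(pruned, restored))
print(q, scale, max_error)
```

Recording the reconstruction error alongside the compressed model is one way such a step could feed the evidence trail described above.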

03

Phase 3 | Operate: Assure Reliability in the Field

- High-performance deployment: Models run on optimized hardware (FPGA, SDR, GPU) for ultra-low latency in real-world environments.

- Continuous assurance: Live monitoring detects drift, triggers re-training when needed, and ensures rollback to safe versions.

- Real-time dashboards: Total visibility into performance, integrity, and compliance, with automatic re-certification triggers if changes impact safety.

Result: A living AI system that stays compliant, trustworthy, and effective over time.
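The continuous-assurance loop in Phase 3 (detect drift, retrain, or roll back) can be sketched as follows. This is a minimal Python example under stated assumptions: drift is measured as the shift of a live window's mean in units of the reference standard error, and the thresholds `retrain_at` and `rollback_at` are illustrative values, not figures from the platform.

```python
from statistics import mean, stdev


def drift_score(reference, live):
    """Shift of the live mean, in units of the reference standard error."""
    spread = stdev(reference)
    shift = abs(mean(live) - mean(reference))
    if spread == 0:
        # Constant reference data: any shift at all counts as unbounded drift.
        return 0.0 if shift == 0 else float("inf")
    return shift / (spread / len(live) ** 0.5)


def decide(reference, live, retrain_at=3.0, rollback_at=6.0):
    """Return 'ok', 'retrain', or 'rollback' (hypothetical policy)."""
    score = drift_score(reference, live)
    if score >= rollback_at:
        return "rollback"   # severe drift: revert to the last safe version
    if score >= retrain_at:
        return "retrain"    # moderate drift: schedule re-training
    return "ok"


reference = [0.0, 1.0, 2.0, 3.0, 4.0]          # feature values seen at validation
print(decide(reference, [2.0, 2.1, 1.9, 2.0]))  # stable window
print(decide(reference, [4.5, 4.6, 4.4, 4.5]))  # moderate shift
print(decide(reference, [8.0, 8.0, 8.0, 8.0]))  # severe shift
```

A two-threshold policy keeps the safe action (rollback) separate from the slower corrective one (retraining), which mirrors the bullet above.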

The Humanitas XAI Pipeline

01

Define scope & requirements

Translate stakeholder needs into certifiable AI requirements.

02

Performance assessment

Set measurable KPIs, reliability benchmarks, and failure modes.

03

Validation & testing

Validate data, scenarios, and AI models under expected conditions.

04

Explainability & bias checks

Demonstrate fairness, transparency, and coverage of decision paths.

05

Formal verification & assurance

Build structured safety cases using GSN (Goal Structuring Notation).

06

Software quality & configuration

Secure coding, strict version control, and audit logs.

07

Continuous monitoring

Drift detection, retraining, and anomaly tracking in real-world use.

08

Certification package generation

Compile audit-ready reports and evidence for regulators.
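Step 05 builds safety cases in GSN. The Python sketch below shows one simplified way to represent such a case as a tree and to find goals that are not yet supported. It models only goals and solutions (evidence references); strategies, context, and assumptions are omitted, and the goal IDs and claims are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Goal:
    """A GSN-style goal: a claim backed by sub-goals and/or solutions."""
    id: str
    claim: str
    subgoals: list = field(default_factory=list)
    solutions: list = field(default_factory=list)  # evidence references


def undeveloped(goal):
    """Return IDs of leaf goals that have no supporting evidence."""
    if not goal.subgoals and not goal.solutions:
        return [goal.id]
    found = []
    for sub in goal.subgoals:
        found.extend(undeveloped(sub))
    return found


# Hypothetical safety case for illustration only.
case = Goal("G1", "The model is acceptably safe in its operating context", subgoals=[
    Goal("G2", "Performance meets the defined KPIs", solutions=["validation-report"]),
    Goal("G3", "The model is robust to sensor noise"),
])

print(undeveloped(case))
```

A check like this could gate certification package generation in step 08: the package is compiled only once no goal is left undeveloped.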

Certification Pipeline: Turning AI into Trustworthy AI

Our certification workflow turns complex AI into audit-ready systems prepared for regulatory review.

Deliverables include: KPI dashboards, validation reports, bias analyses, safety cases, traceability matrices, and compliance logs.

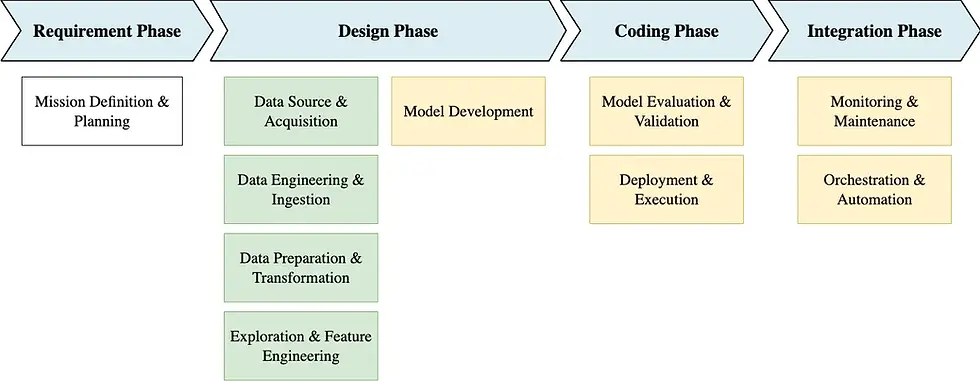

01

Requirement phase

Mission goals → certifiable specifications.

02

Design phase

Data engineered with documented provenance and integrity.

03

Coding phase

Models optimized and continuously tested for explainability.

04

Integration phase

Deployment coupled with supervision, retraining triggers, and live compliance dashboards.

Lifecycle Approach: Traceability at Every Step

At every stage of development, we integrate certification requirements and generate the proof regulators demand.

Every phase produces auditable artifacts, ensuring transparency from idea to operation.
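To make the idea of traceability concrete, here is a minimal Python sketch of a traceability matrix that links each requirement to the tests and datasets that verify it. The record fields (`test`, `req`, `dataset`, `passed`) and the requirement IDs are assumptions made for this example, not the platform's actual schema.

```python
def build_matrix(requirements, records):
    """Group test records by requirement; collect tests that trace to nothing."""
    matrix = {req: [] for req in requirements}
    orphans = []
    for rec in records:
        if rec["req"] in matrix:
            matrix[rec["req"]].append(rec)
        else:
            orphans.append(rec["test"])
    return matrix, orphans


def unverified(matrix):
    """Requirements with no passing test, which are gaps an auditor would flag."""
    return [req for req, recs in matrix.items()
            if not any(r["passed"] for r in recs)]


# Hypothetical requirements and test records.
requirements = ["REQ-1", "REQ-2", "REQ-3"]
records = [
    {"test": "T1", "req": "REQ-1", "dataset": "ds-v1", "passed": True},
    {"test": "T2", "req": "REQ-2", "dataset": "ds-v1", "passed": False},
    {"test": "T3", "req": "REQ-9", "dataset": "ds-v2", "passed": True},
]

matrix, orphans = build_matrix(requirements, records)
print(unverified(matrix))  # requirements still lacking a passing test
print(orphans)             # tests that reference an unknown requirement
```

Both outputs are useful audit signals: an unverified requirement is missing proof, and an orphan test is proof with nothing to attach to.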

01

Aerospace & Defense – Assured Autonomy

Trusted AI for aviation and defense requires certifiable autonomy. Our pipeline ensures every decision in drones, aircraft, and mission systems is transparent, compliant, and safe.

02

Telecom – Explainable Connectivity

Smarter management of 5G, RF, and satellite systems with AI that optimizes spectrum, routing, and uptime—while remaining explainable and audit-ready.

03

Logistics & Supply Chain – Transparent Logistics

From fleet operations to warehouse automation, Humanitas XAI powers accountable, real-time decision-making that improves efficiency and maintains compliance.

04

Regulated Enterprises – Responsible Enterprise AI

In finance, healthcare, and insurance, AI must be responsible and auditable. Our pipeline provides the traceability and compliance required to innovate with confidence.

Real-World Applications

Why It Matters

When AI powers critical decisions, performance alone isn’t enough.

Humanitas XAI combines power + proof: robust intelligence that is transparent, compliant, and certifiable—so organizations can innovate without compromising trust.